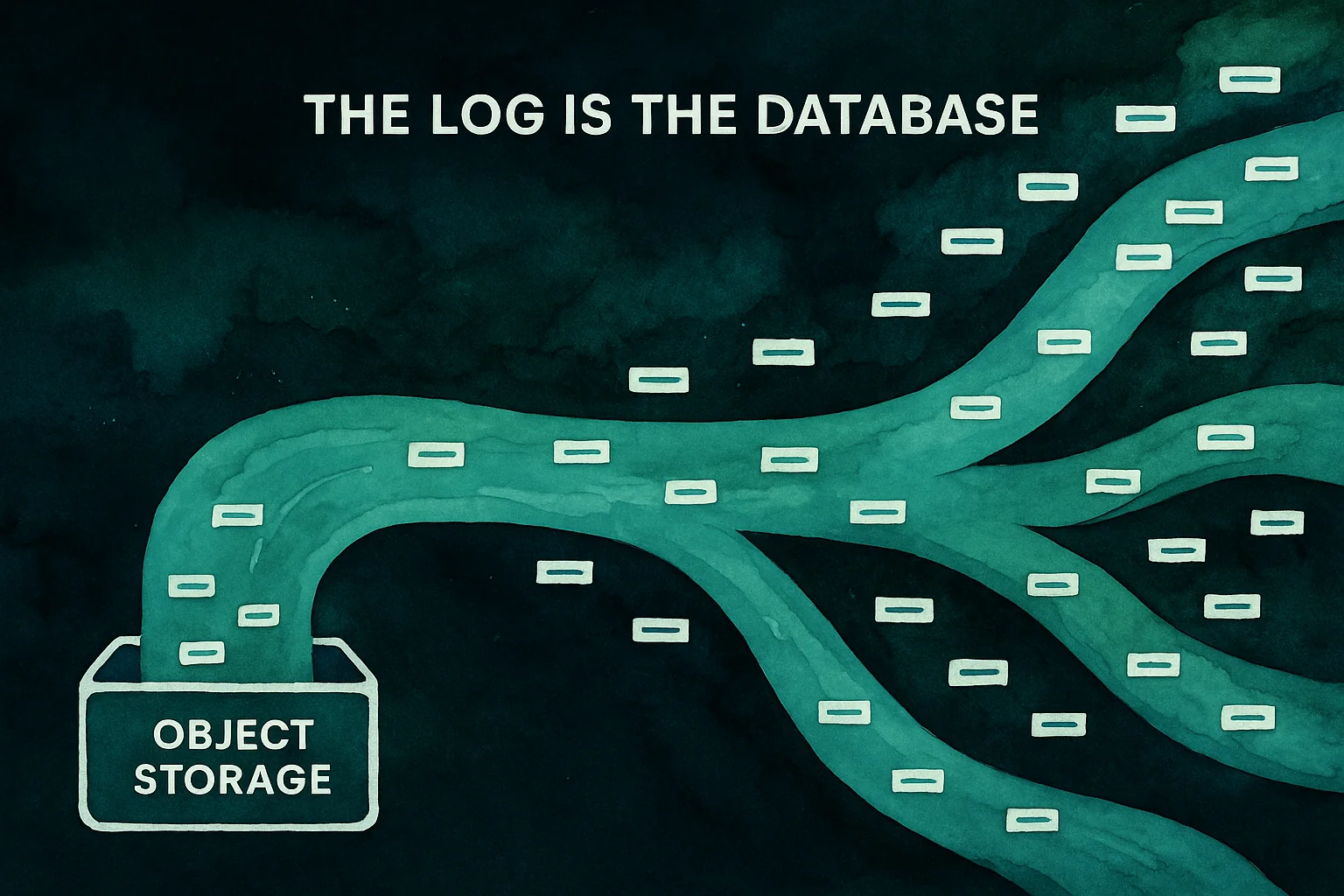

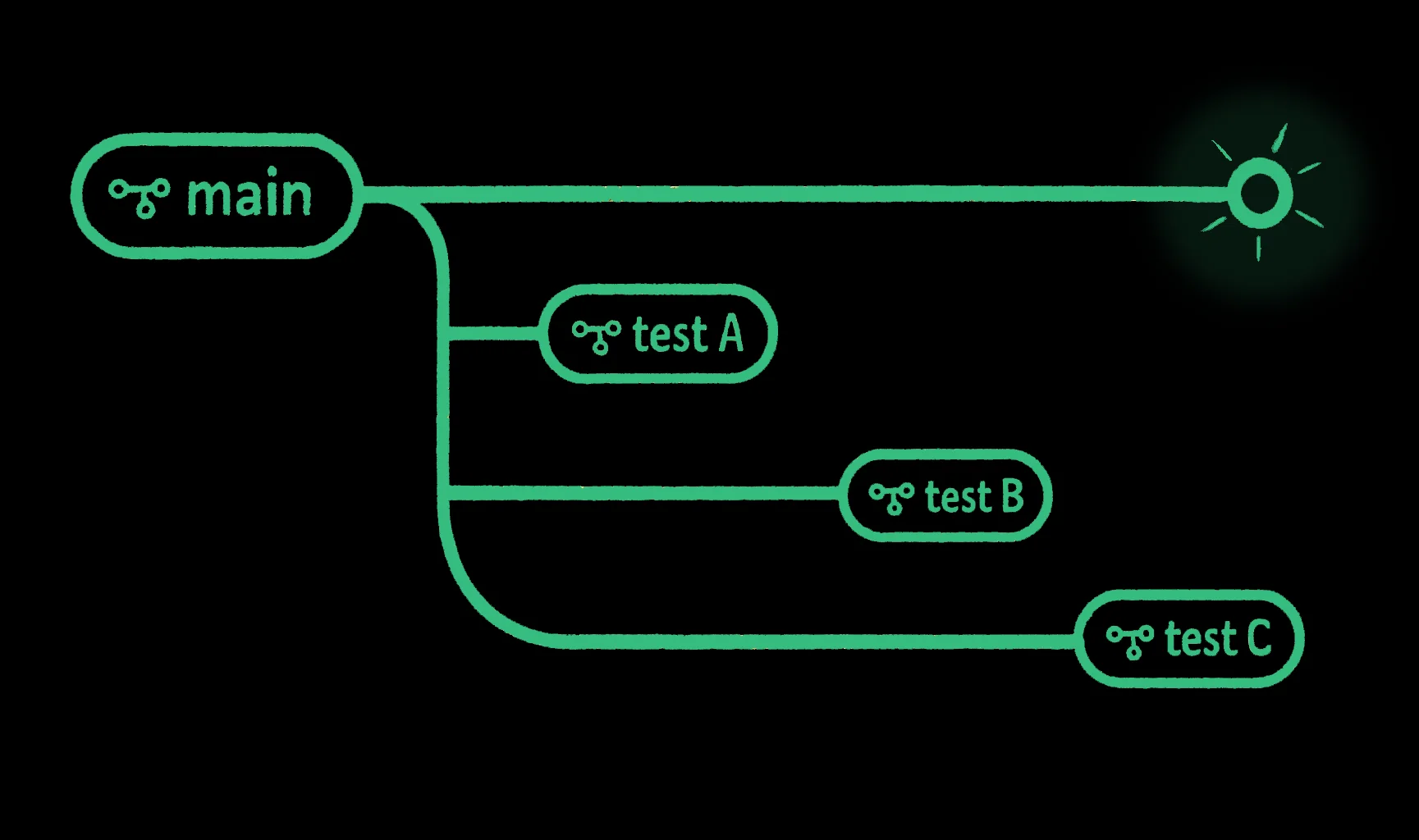

Fearless dataset experimentation with bucket forking

Fork buckets like code. Get instant, isolated copies of large datasets for AI training, experimentation, and multi-agent workflows. Achieve reproducibility, saf…

· 20 min read